by Vanessa Thomas

Like a tornado siren for life-threatening storms in America’s heartland, a new computer model that combines artificial intelligence (AI) and NASA satellite data could sound the alarm for dangerous space weather.

The model uses AI to analyze spacecraft measurements of the solar wind (an unrelenting stream of material from the Sun) and predict where an impending solar storm will strike, anywhere on Earth, with 30 minutes of advance warning. This could provide just enough time to prepare for these storms and prevent severe impacts on power grids and other critical infrastructure.

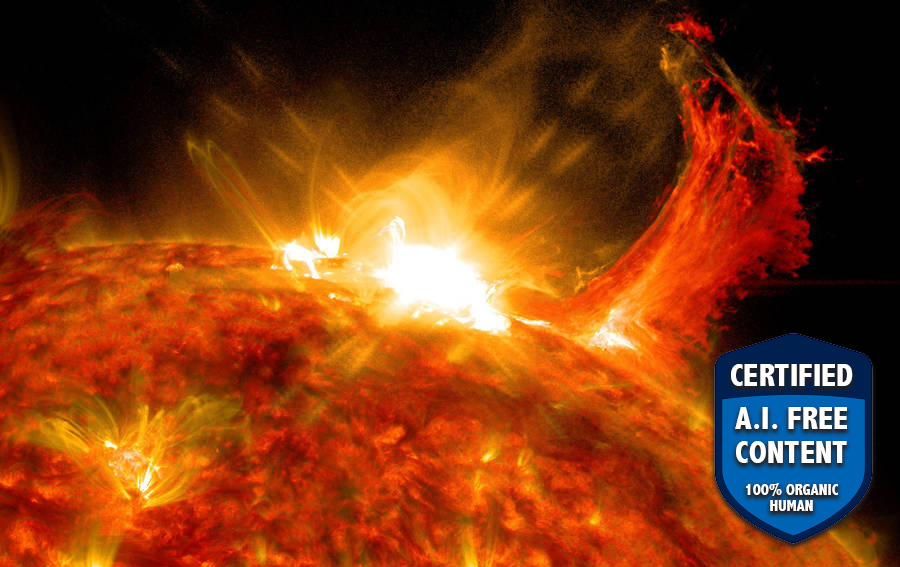

The Sun constantly sheds solar material into space – both in a steady flow known as the “solar wind,” and in shorter, more energetic bursts from solar eruptions. When this solar material strikes Earth’s magnetic environment (its “magnetosphere”), it sometimes creates so-called geomagnetic storms. The impacts of these magnetic storms can range from mild to extreme, but in a world increasingly dependent on technology, their effects are growing ever more disruptive.

For example, a destructive solar storm in 1989 caused electrical blackouts across Quebec for 12 hours, plunging millions of Canadians into the dark and closing schools and businesses. The most intense solar storm on record, the Carrington Event in 1859, sparked fires at telegraph stations and prevented messages from being sent. If the Carrington Event happened today, it would have even more severe impacts, such as widespread electrical disruptions, persistent blackouts, and interruptions to global communications. Such technological chaos could cripple economies and endanger the safety and livelihoods of people worldwide.

In addition, the risk of geomagnetic storms and their devastating effects on society is currently rising as we approach the next “solar maximum,” the peak of the Sun’s roughly 11-year activity cycle, which is expected to arrive sometime in 2025.

To help prepare, an international team of researchers at the Frontier Development Lab (a public-private partnership that includes NASA, the U.S. Geological Survey, and the U.S. Department of Energy) has been using AI to look for connections between the solar wind and the geomagnetic disruptions, or perturbations, that wreak havoc on our technology. The researchers applied an AI method called “deep learning,” which trains computers to recognize patterns based on previous examples. They used this method to identify relationships between solar wind measurements from heliophysics missions (including ACE, Wind, IMP-8, and Geotail) and geomagnetic perturbations observed at ground stations across the planet.

From this, they developed a computer model called DAGGER (formally, Deep Learning Geomagnetic Perturbation) that can quickly and accurately predict geomagnetic disturbances worldwide, 30 minutes before they occur. According to the team, the model can produce predictions in less than a second, and the predictions update every minute.